Tiers and tables and more! Oh my!

Which tables can I send to the Sentinel data lake?

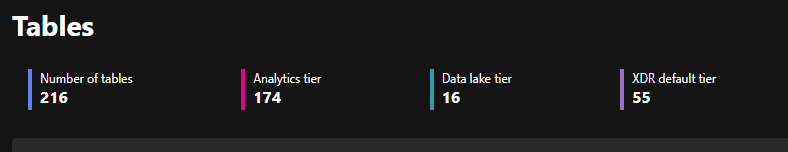

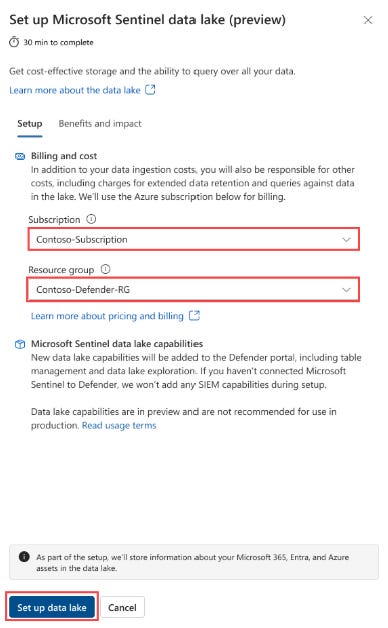

If you’ve enabled the new Sentinel data lake, you’re probably already checking out the new Tables blade in the Defender portal. This is where you choose where to send your logs: to the Analytics tier or to the Data lake tier. There is also a third set of tables in what is called the XDR default tier.

In this post, we’ll explain what these tiers mean and how you can use them.

The Analytics tier is where you put data that you want available for incident response, alerting, hunting, workbooks, etc. This is what is often referred to as “Log Analytics”.

The Data lake tier is the low-cost "cold" tier. Data in the data lake tier isn't available for real-time analytics features and threat hunting. But you can access data in the lake whenever you need it through KQL jobs, analyze trends over time by running scheduled KQL or Spark jobs, and aggregate insights from incoming data at a regular cadence by using summary rules.

If you change a table’s tier from Analytics to lake-only, existing analytics features (e.g., analytics rules, hunting queries) will no longer work for that data. The platform will warn you before making the change. More importantly, the minute you click the button that enables the data lake, you’ll also lose any discounts that you had on retention.

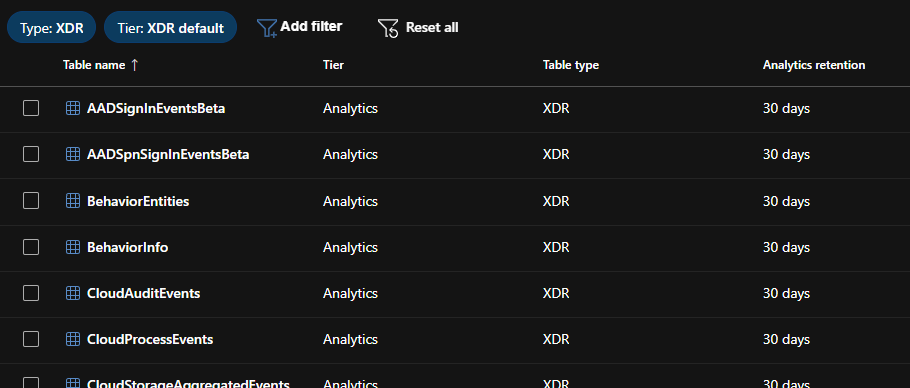

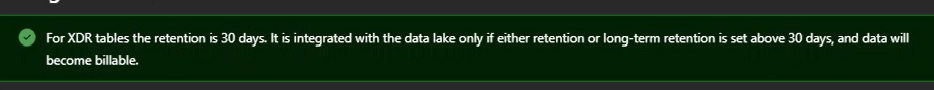

The XDR default tier contains the Microsoft Defender XDR threat hunting data. It comes with 30 days of analytics retention, which is included in the XDR license. You can extend the retention period of supported Defender XDR tables beyond 30 days. When you do, Microsoft automatically creates the table in your Microsoft Sentinel workspace in the Analytics tier.
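The retention behavior described above can be sketched as a small decision function. This is only an illustration of the rule as stated in this post, not a Microsoft API: the function name, the tier strings, and the assumption that the table is one of the "supported" Defender XDR tables are all hypothetical.

```python
# Illustrative sketch only: models the rule described in the post,
# not an actual Microsoft Sentinel or Defender API.

XDR_INCLUDED_RETENTION_DAYS = 30  # included in the XDR license, per the post


def tier_for_supported_xdr_table(analytics_retention_days: int) -> str:
    """Return the tier a supported XDR table would sit in (hypothetical helper).

    Assumes the table is one of the supported Defender XDR tables.
    """
    if analytics_retention_days < 1:
        raise ValueError("retention must be at least 1 day")
    if analytics_retention_days <= XDR_INCLUDED_RETENTION_DAYS:
        # Standard retention: the table stays in the XDR default tier.
        return "XDR default"
    # Extended retention: the table is created in the Sentinel workspace
    # in the Analytics tier.
    return "Analytics"
```

For example, `tier_for_supported_xdr_table(30)` stays in the XDR default tier, while `tier_for_supported_xdr_table(90)` models the table being created in the Analytics tier.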

Which tables can you send to the data lake?

It’s important to know which tables can be “data lake only” and which cannot.

Any table with Type: XDR and Tier: XDR default cannot be moved to the data lake at all. These are XDR tables with the standard 30-day retention. (See chart at end of post)
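If you export the list from the Tables blade, a quick filter can flag the tables this rule blocks. The sketch below is a minimal illustration: the `TableInfo` class, its field names, and the sample rows are hypothetical, and the check covers only the single rule stated above. A table that passes it may still be ineligible for other reasons.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TableInfo:
    """Hypothetical record mirroring the Type and Tier columns in the Tables blade."""
    name: str
    table_type: str
    tier: str


def blocked_by_xdr_default_rule(table: TableInfo) -> bool:
    """True if the table has Type: XDR and Tier: XDR default.

    Per the post, such tables cannot be moved to the data lake.
    False only means this one rule does not apply.
    """
    return table.table_type == "XDR" and table.tier == "XDR default"


# Sample rows are made up for illustration.
tables = [
    TableInfo("DeviceEvents", "XDR", "XDR default"),
    TableInfo("DeviceEvents", "XDR", "Analytics"),
    TableInfo("SecurityEvent", "Sentinel", "Analytics"),
]

blocked = [t for t in tables if blocked_by_xdr_default_rule(t)]
```

Here `blocked` contains only the first row, the XDR table still on its standard 30-day retention.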

If you haven’t sent your XDR data to Sentinel, you will need to do so before you can change the Total retention period. Once you change it, the data goes to the data lake instead of to “archive”.

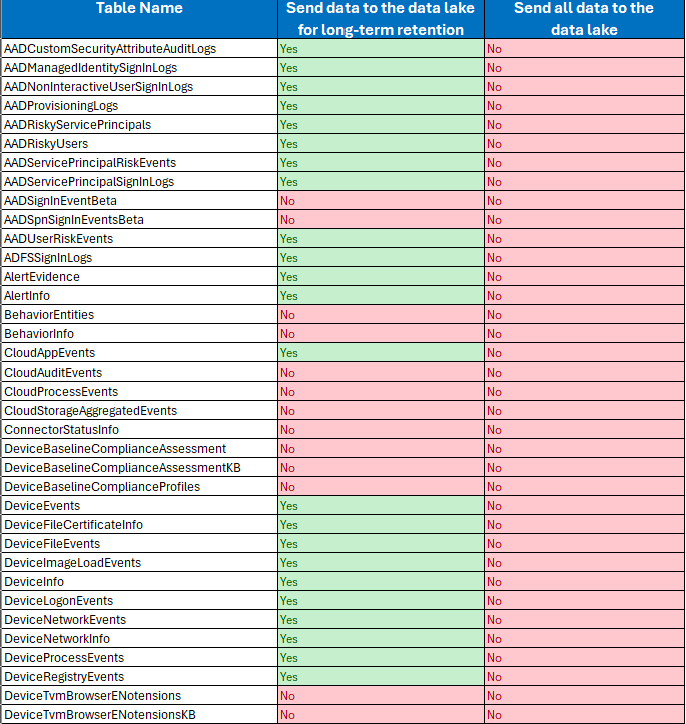

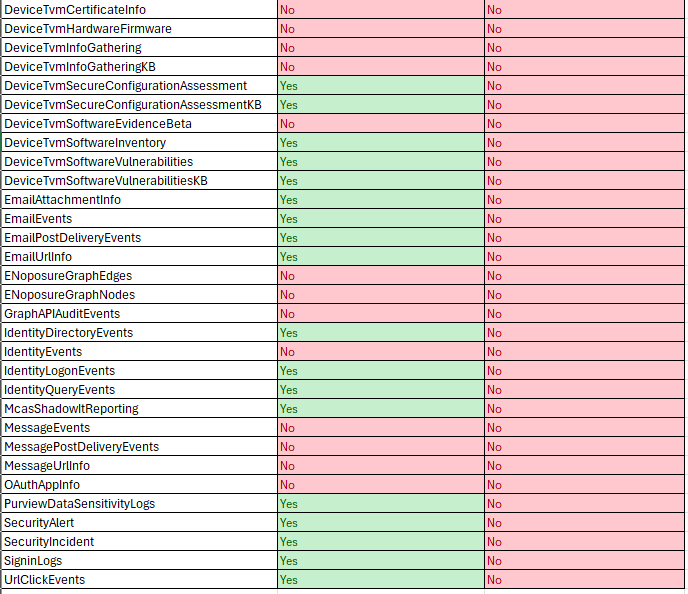

Below is a list of what I’m calling the “default Microsoft” tables. It shows which tables can be sent to the data lake and which cannot. We’ll have a follow-up post about the “non-Microsoft” tables.

Is the Data Lake layer based on the same mechanism as archived logs stored long-term in a Log Analytics workspace?

Also, is it more cost-effective to store logs in the Data Lake layer?

If there are advantages in terms of long-term retention and lower cost, I’d like to consider proposing it to our customers.

Does this mean that I still need ADX if I want to keep DeviceTvmCertificateInfo data beyond the analytics tier retention period?